Deep Learning Techniques Neural Networks Simplified

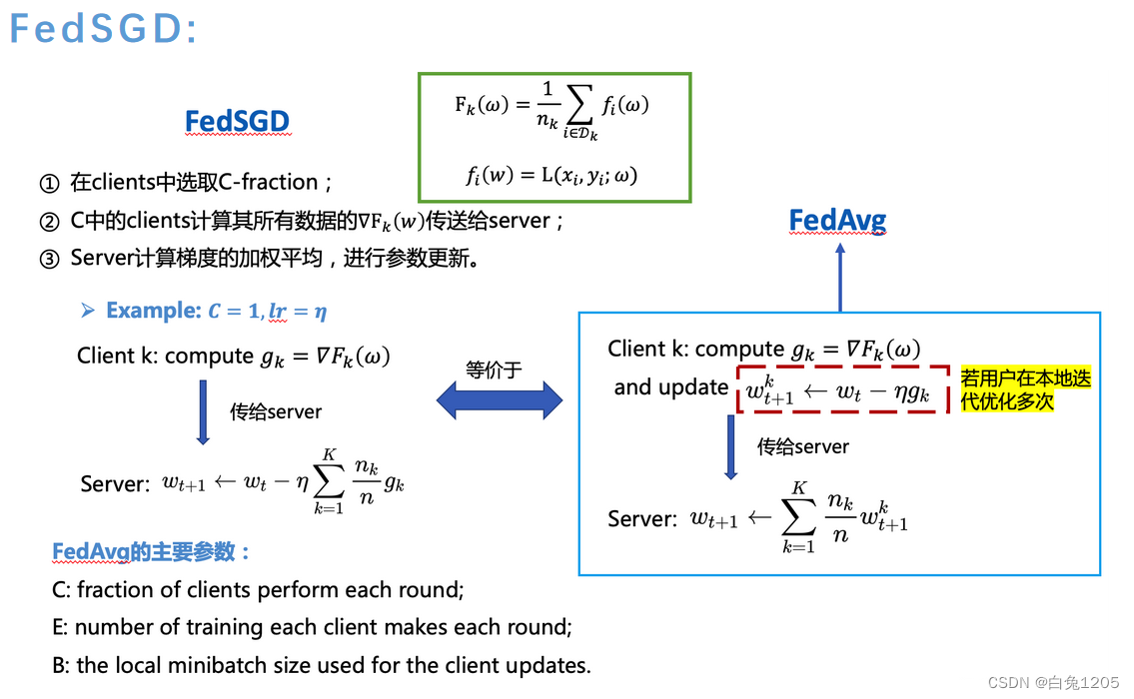

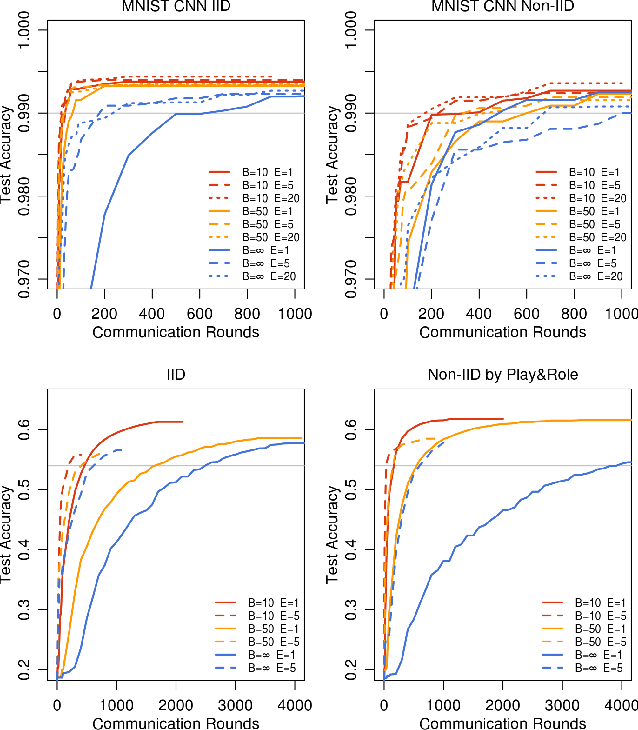

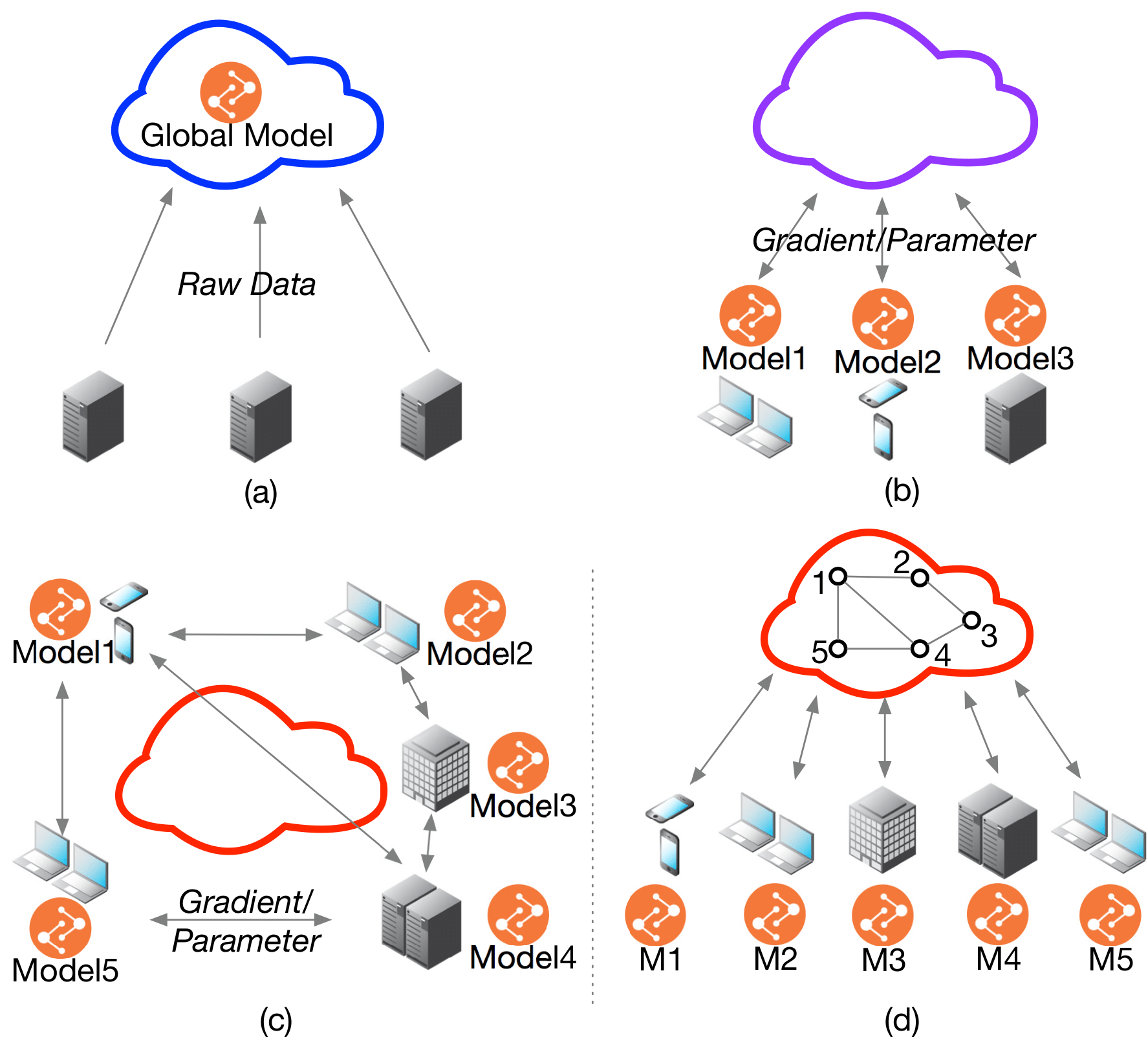

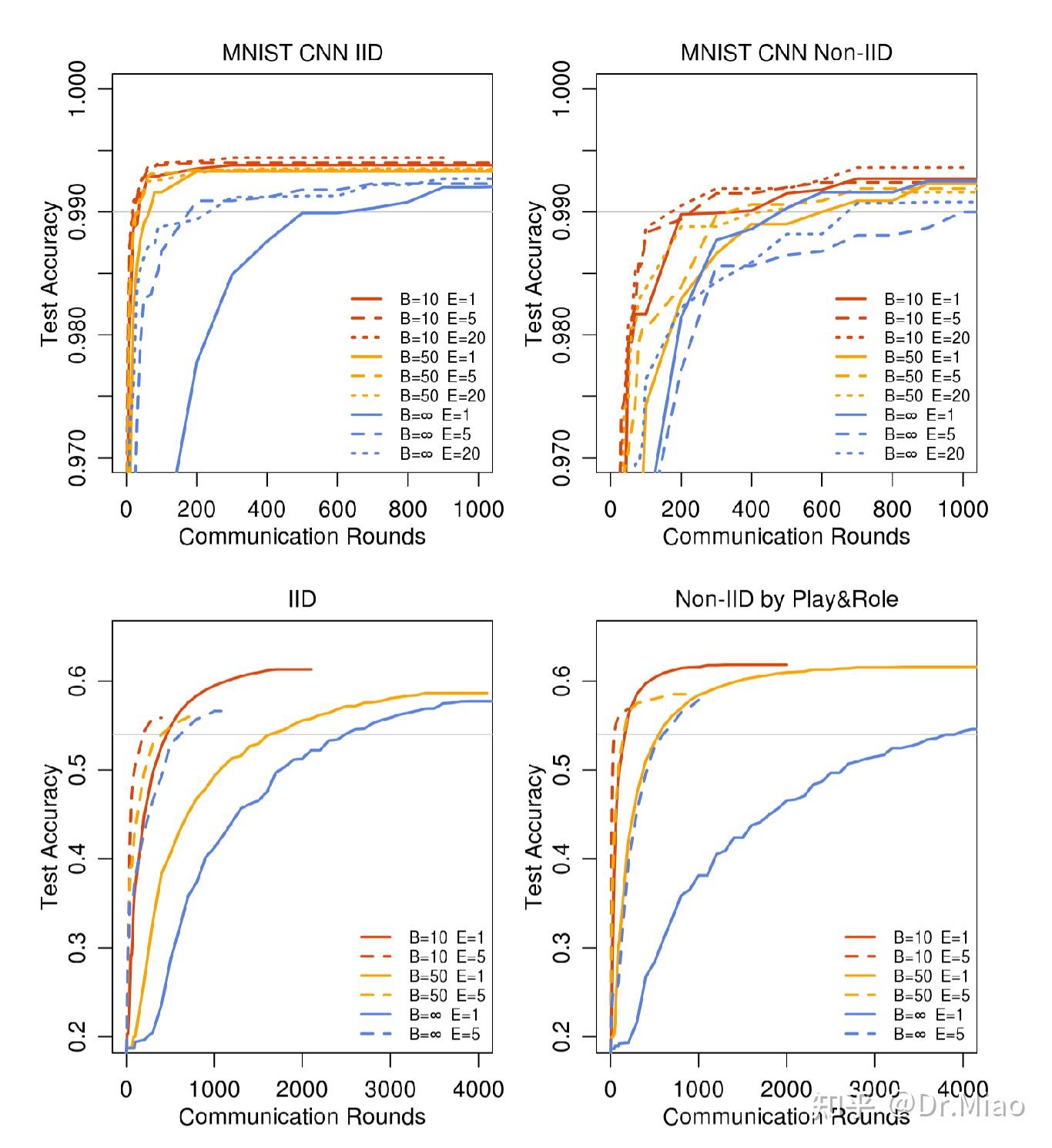

Fig. 6: The effect of training for many local epochs between averaging steps. 4. Conclusion. In this article, I review Federated Learning and the FedAvg algorithm. Federated learning is a powerful ...

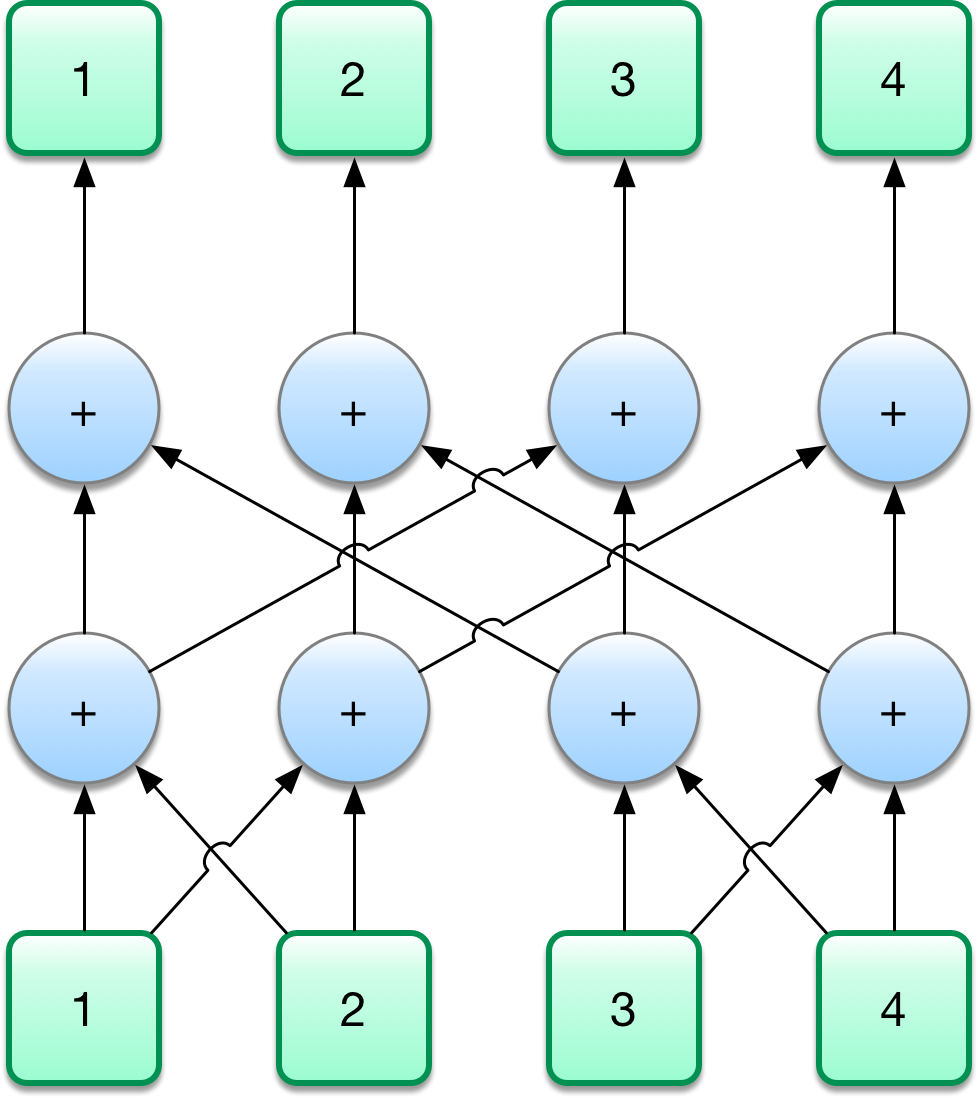

Communication Primitives in Deep Learning Frameworks

Communication-efficient learning of deep networks from decentralized data. Communication-efficient and differentially-private distributed SGD. N Agarwal, AT Suresh, FXX Yu, S Kumar, B McMahan. 2018. Practical secure aggregation for federated learning on user-held data. K Bonawitz, V Ivanov, B Kreuter, A Marcedone, HB McMahan.

Communication-Efficient Learning of Deep Networks from Decentralized Data - CSDN Blog

Communication-efficient learning of deep networks from decentralized data. H. B. McMahan, E. Moore, D. Ramage. arXiv preprint, 2016. Key Areas: Distributed Artificial Intelligence. Modern mobile devices have access to a wealth of data suitable for learning models, which in turn can greatly improve the user experience on the device.

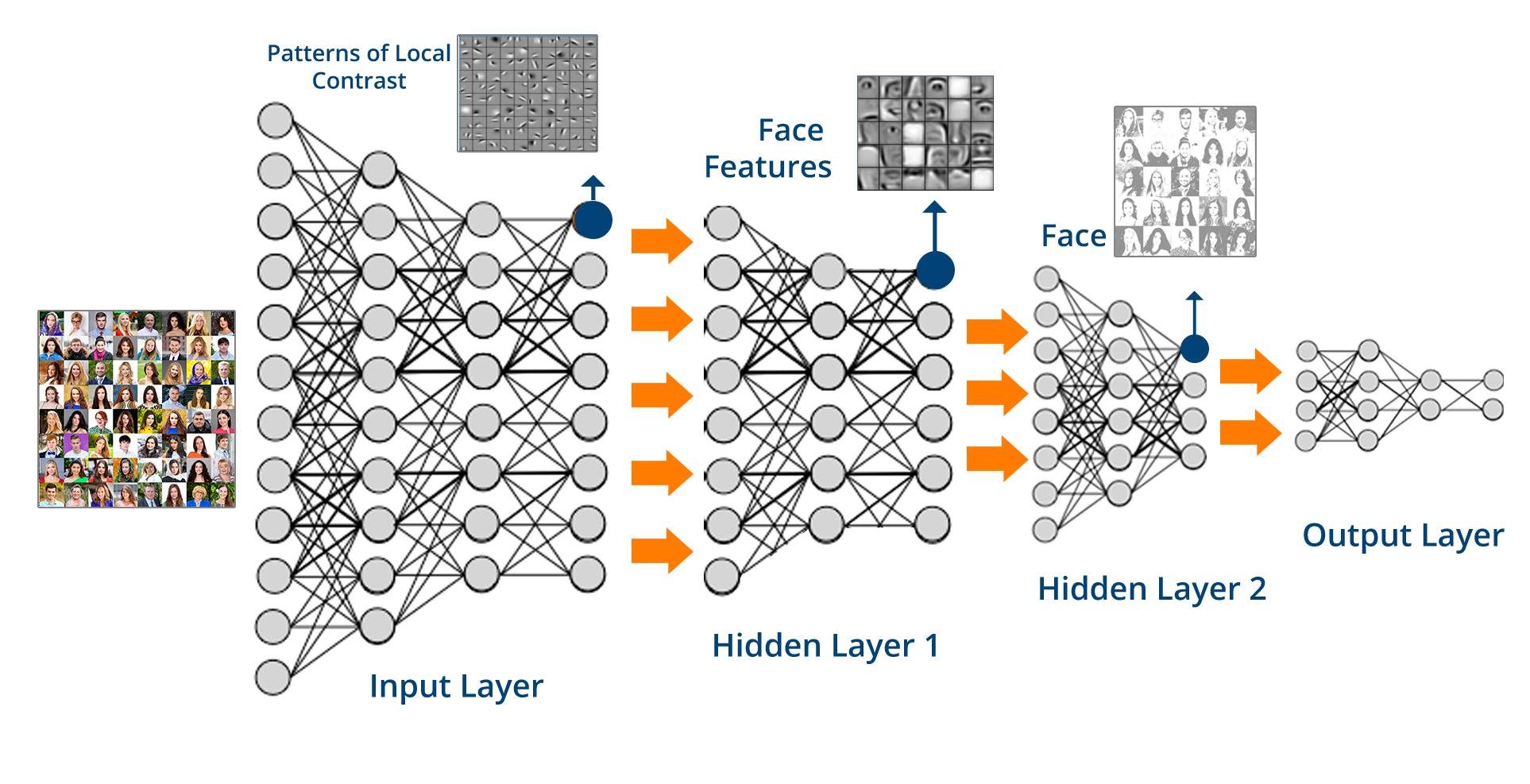

Deep Learning Networks 7 Awesome Types of Deep Learning Networks

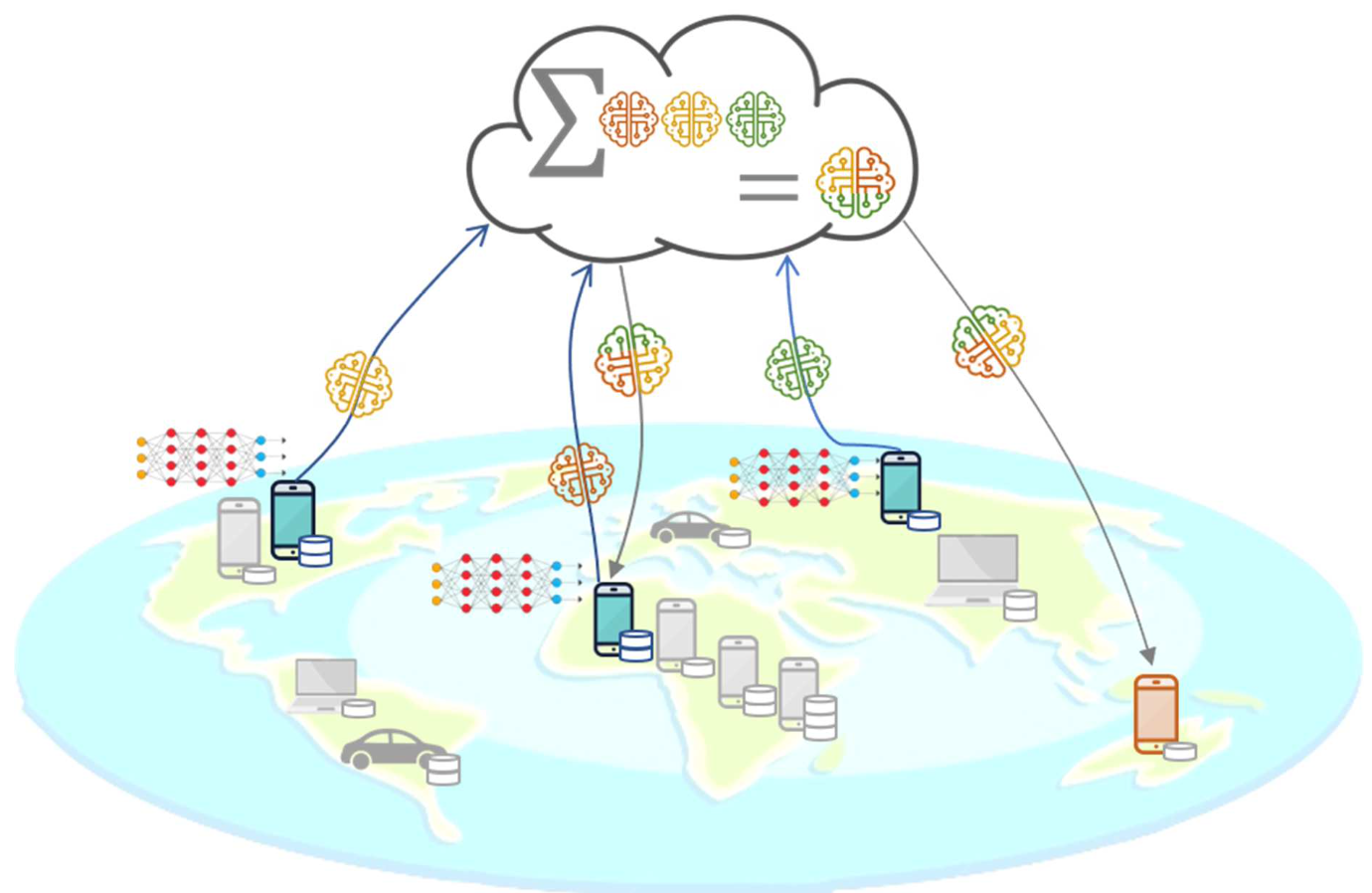

Communication-Efficient Learning of Deep Networks from Decentralized Data. Modern mobile devices have access to a wealth of data suitable for learning models, which in turn can greatly improve the user experience on the device. For example, language models can improve speech recognition and text entry, and image models can automatically select good photos.

Create Deep Learning And Neural Network Models With Keras And Tensorflow lupon.gov.ph

We term this decentralized approach Federated Learning. We present a practical method for the federated learning of deep networks based on iterative model averaging, and conduct an extensive empirical evaluation, considering five different model architectures and four datasets. These experiments demonstrate the approach is robust to the ...

Figure 2 from Communication-Efficient Learning of Deep Networks from Decentralized Data

I. INTRODUCTION. Effective training of Deep Neural Networks (DNNs) often requires access to a vast amount of data typically unavailable on a single device. Transferring data from different devices (a.k.a. clients or agents) to a central server for training raises ...

Centralized vs Decentralized vs Distributed a quick overview by Julio Marín Medium

Brendan McMahan, Eider Moore, Daniel Ramage, Seth Hampson, Blaise Agüera y Arcas: Communication-Efficient Learning of Deep Networks from Decentralized Data. AISTATS 2017: 1273-1282. Type: Conference or Workshop Paper.

Communication-Efficient Learning of Deep Networks from Decentralized Data - 穷酸秀才大草包 - 博客园

Figure 9: Test accuracy versus number of minibatch gradient computations (B = 50). The baseline is standard sequential SGD, as compared to FedAvg with different client fractions C (recall C = 0 means one client per round), and different numbers of local epochs E. - "Communication-Efficient Learning of Deep Networks from Decentralized Data"

Centralized vs. Decentralized Digital Networks Understanding The Differences Blockchain

Modern mobile devices have access to a wealth of data suitable for learning models, which in turn can greatly improve the user experience on the device. For example, language models can improve speech recognition and text entry, and image models can automatically select good photos. However, this rich data is often privacy sensitive, large in quantity, or both, which may preclude logging to the ...

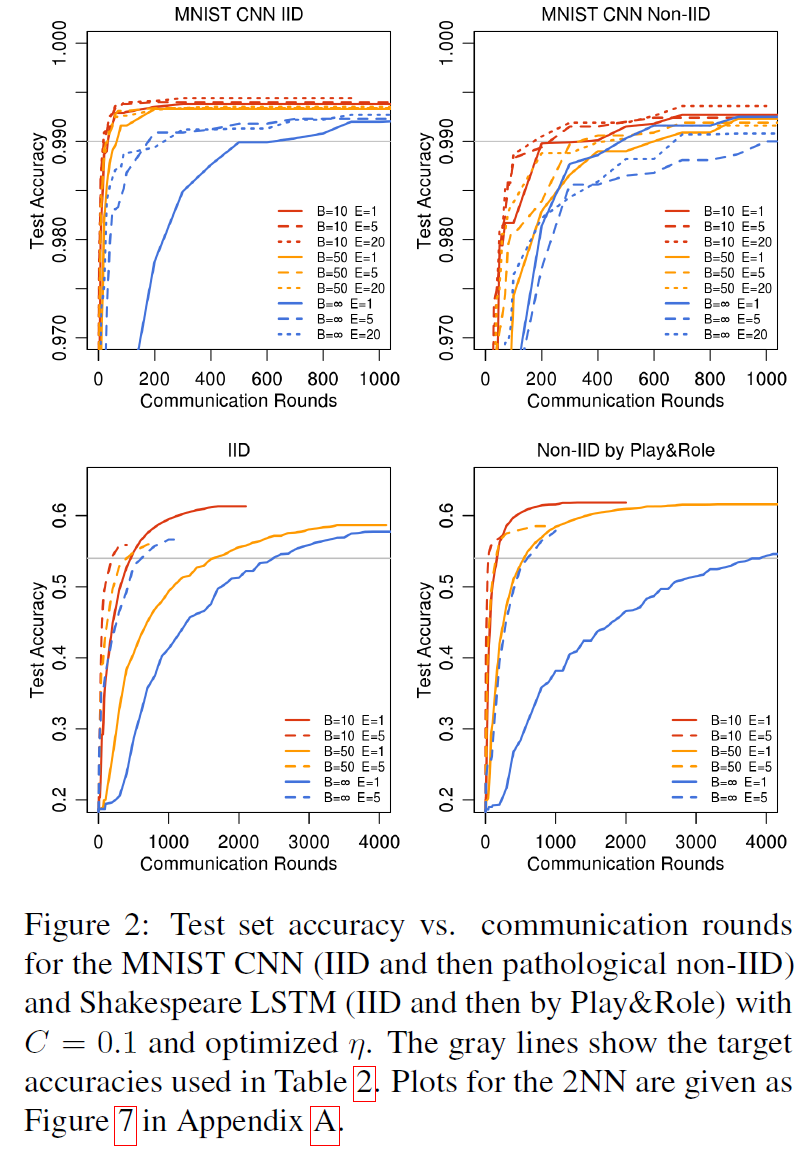

Decentralized Federated MultiTask Learning and System Design Zhengyu Yang

Figure 2: Test set accuracy vs. communication rounds for the MNIST CNN (IID and then pathological non-IID) and Shakespeare LSTM (IID and then by Play&Role) with C = 0.1 and optimized η. The gray lines show the target accuracies used in Table 2. Plots for the 2NN are given as Figure 7 in Appendix A. - "Communication-Efficient Learning of Deep Networks from Decentralized Data"

(PDF) Communication-Efficient Learning of Deep Networks from Decentralized Data (2017) H

Implementation of the vanilla federated learning paper: Communication-Efficient Learning of Deep Networks from Decentralized Data. Experiments are run on MNIST, Fashion MNIST and CIFAR10 (both IID and non-IID). In the non-IID case, the data can be split among the users equally or unequally.
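To make the IID versus non-IID distinction concrete, here is a minimal sketch of two ways to split example indices among clients. It is not taken from the repository described above: the function names `iid_partition` and `shard_partition` and the `shards_per_client` parameter are hypothetical, and the shard-based scheme (sort by label, cut into shards, hand each client a few shards) is only one common way to build a label-skewed, equally sized split.

```python
import random

def iid_partition(labels, num_clients, seed=0):
    """Shuffle all example indices and deal them out evenly (IID split)."""
    rng = random.Random(seed)
    idx = list(range(len(labels)))
    rng.shuffle(idx)
    return [idx[k::num_clients] for k in range(num_clients)]

def shard_partition(labels, num_clients, shards_per_client=2, seed=0):
    """Label-skewed (non-IID) split with equal client sizes.

    Assumption for illustration: indices are sorted by label, cut into
    num_clients * shards_per_client shards, and each client receives
    shards_per_client shards. Leftover examples that do not fill a
    whole shard are dropped.
    """
    rng = random.Random(seed)
    # Sorting by label means each shard covers only a few classes.
    idx = sorted(range(len(labels)), key=lambda i: labels[i])
    num_shards = num_clients * shards_per_client
    shard_size = len(idx) // num_shards
    shards = [idx[s * shard_size:(s + 1) * shard_size] for s in range(num_shards)]
    rng.shuffle(shards)
    parts = []
    for k in range(num_clients):
        own = shards[k * shards_per_client:(k + 1) * shards_per_client]
        parts.append([i for shard in own for i in shard])
    return parts

# Toy labels: 1000 examples, 10 classes, 100 examples per class.
labels = [i % 10 for i in range(1000)]
iid_parts = iid_partition(labels, num_clients=10)
skewed_parts = shard_partition(labels, num_clients=10, shards_per_client=2)
```

With these toy labels every shard holds a single class, so each client in `skewed_parts` sees at most two classes, while each client in `iid_parts` sees a random mix. An unequal split could be sketched by giving clients different numbers of shards.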

The Data Scientist

Learning of Deep Networks from Decentralized Data

A fixed set of K clients is assumed. In each round, a random fraction C of the clients is selected. The server sends the current global model to each selected client. Each client performs a local computation on the given global state and produces an update. The server aggregates the updates from the clients into the global model. These steps are then repeated.
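The round structure above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the paper's implementation: the model is a single scalar weight `w` fit to `y = w * x` by minibatch SGD, the helper names `local_update` and `fedavg` are hypothetical, and the aggregation step is a simple average weighted by each selected client's number of examples.

```python
import random

def local_update(w, data, epochs, batch_size, lr, rng):
    """Client step: run E epochs of minibatch SGD starting from the global w."""
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)
        for i in range(0, len(data), batch_size):
            batch = data[i:i + batch_size]
            # Gradient of the mean of 0.5 * (w*x - y)^2 with respect to w.
            grad = sum((w * x - y) * x for x, y in batch) / len(batch)
            w -= lr * grad
    return w

def fedavg(clients, rounds, C, E, B, lr, seed=0):
    """Server loop: select clients, collect local models, average them."""
    rng = random.Random(seed)
    K = len(clients)
    w_global = 0.0
    for _ in range(rounds):
        m = max(int(C * K), 1)  # C = 0 still selects one client per round
        selected = rng.sample(range(K), m)
        # Each selected client trains locally from the current global model.
        updates = [
            (len(clients[k]), local_update(w_global, clients[k], E, B, lr, rng))
            for k in selected
        ]
        # Aggregate: average weighted by local dataset size.
        n_total = sum(n_k for n_k, _ in updates)
        w_global = sum(n_k * w_k for n_k, w_k in updates) / n_total
    return w_global

# Toy data (assumption): four clients of different sizes, all with y = 3 * x.
clients = [
    [(1.0 + 0.1 * i, 3.0 * (1.0 + 0.1 * i)) for i in range(n)]
    for n in (4, 6, 8, 10)
]
w = fedavg(clients, rounds=50, C=0.5, E=2, B=5, lr=0.1)
```

Here `C`, `E`, and `B` play the roles of client fraction, local epochs, and local minibatch size. A real deep network would replace the scalar `w` with a parameter vector, and the same weighted average would be applied element-wise.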

Communication efficient Learning of Deep Networks from Decentralized Data YouTube

Federated Learning. This is a partial reproduction of the paper Communication-Efficient Learning of Deep Networks from Decentralized Data. Only experiments on MNIST and CIFAR10 (both IID and non-IID) have been produced so far. Note: The scripts will be slow without an implementation of parallel computing. Requirements: python>=3.6, pytorch>=0.4. Run ...

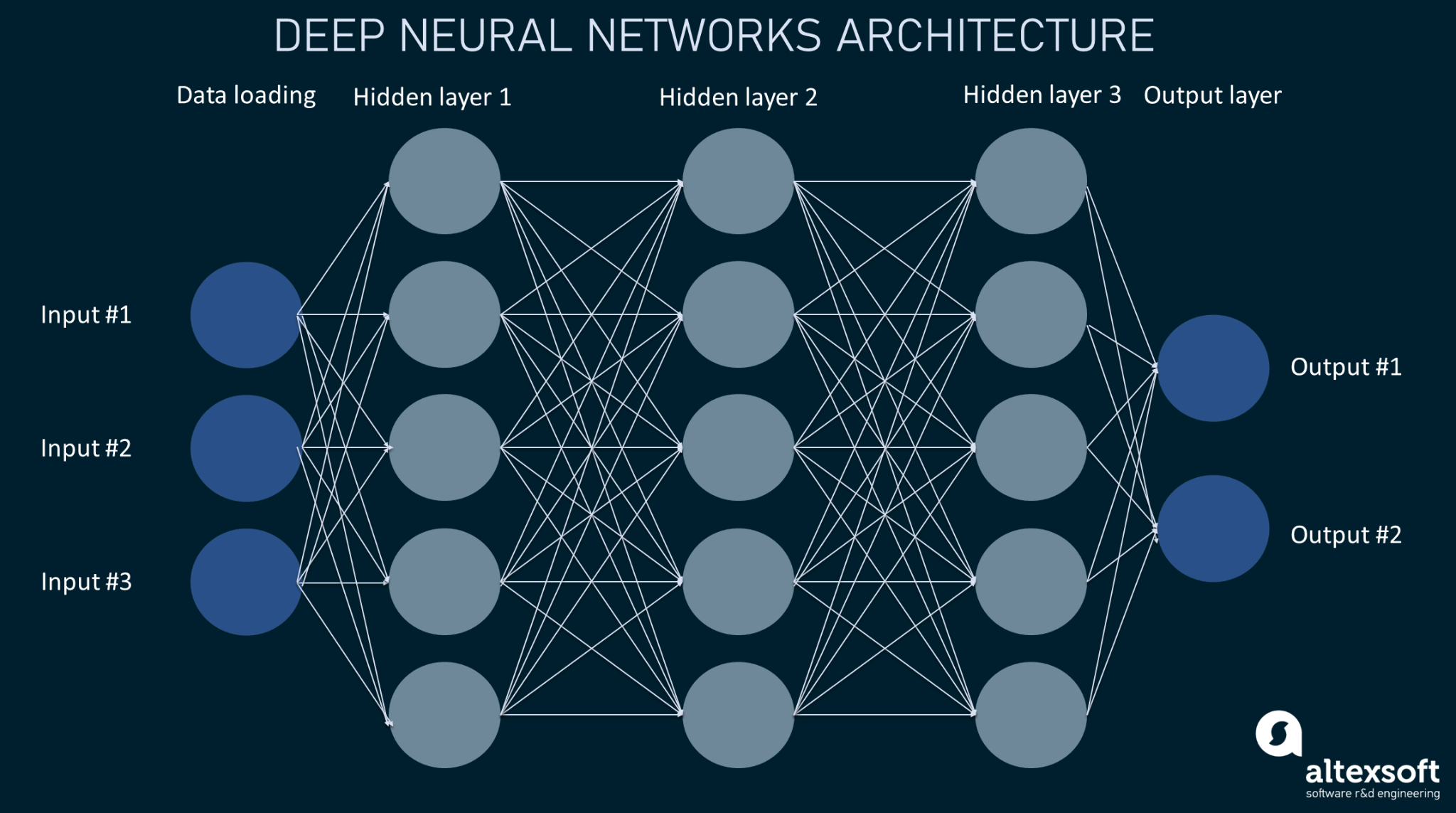

Deep Learning and the Future of Machine Learning AltexSoft

[Paper Explain] Communication-Efficient Learning of Deep Networks from Decentralized Data AI

This work presents a practical method for the federated learning of deep networks based on iterative model averaging, and conducts an extensive empirical evaluation, considering five different model architectures and four datasets. Modern mobile devices have access to a wealth of data suitable for learning models, which in turn can greatly improve the user experience on the device. For example, ...